You don’t need to become (or hire) a data scientist to have a data-driven organization. This was a key takeaway from the Data on Purpose conference recently hosted by Stanford Social Innovation Review (SSIR). This message resonates with us at Learning for Action because, in our years of working with social sector organizations, we’ve come to understand that data use should be embedded in the practices and culture of an organization.

There were so many excellent speakers who presented on building data capacity, and here I highlight three of my favorite presentations. Two of them also emphasized the importance of attending to power and equity in your journey to become a data-driven organization. Given the history of using data to perpetuate injustice, it is critical to bring an equity lens to the ways we engage with data as well as with the technologies that harness that data.

Data Capacity-Building in the Civic Sector

An essential first step in becoming a data-driven organization is to understand where your organization is on its data use journey. Kauser Razvi, principal of Strategic Urban Solutions, shared a framework she used to assess the data capacity of nonprofits in Cleveland: the data maturity framework (developed by DataKind and Data Orchard in the UK). The framework outlines different stages of working with data that an organization progresses through: unaware, nascent, learning, developing, and mastering. Each of the stages is broken down by the following themes: leadership, skills, culture, data, tools, uses, and analysis.

The table below shows the stages for Leadership. What I love about this framework is that organizations can use it to self-assess where they are on their data journey and pinpoint specific areas where they should focus attention and resources. In the Cleveland case, Kauser and her team used organizations’ self-assessments to understand the capacity-building needs of the whole nonprofit field and to develop interventions tailored to each organization. You can find the whole framework here.

Social Sector Data Maturity Framework

Data Maturity: Leadership Stages

Is your data undermining your mission?

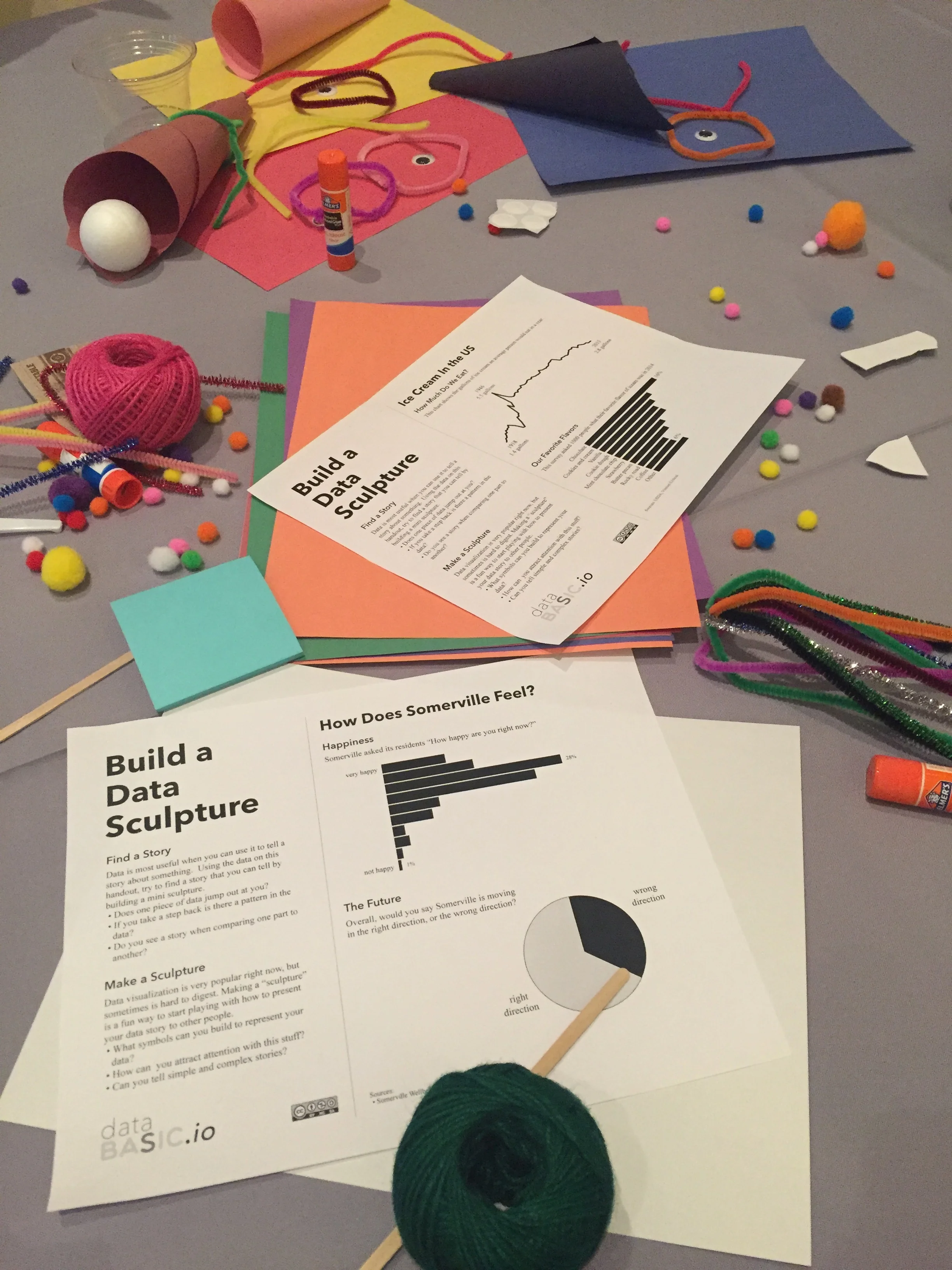

To effectively use data, it is important that everyone across an organization feel comfortable with data. In service of building this capacity, Rahul Bhargava of the MIT Media Lab and his colleague Catherine D’Ignazio created the Data Culture Project – a free, self-service data learning program that offers fun, creative introductions to data for non-technical people. The learning tools and activities are hands-on and designed to meet people where they are.

During the conference, we had fun making Data Sculptures – an activity that lets people quickly build sculptures out of craft materials that tell a simple data story (the photo on the right shows my table’s sculpture in progress). This playful approach gets participants thinking about how stories can be found and presented quickly, and helps people feel more freedom and flexibility with data. The activity also breaks down power dynamics that might exist within an organization or community: everyone, regardless of role or technical expertise with data, contributes equally to the task.

What I also appreciated about Rahul’s presentation is that he reminded us why it is vital to make data accessible to all. Rahul shared the dark history of data: the times when data have been used “as a tool for those in power to consolidate power.” For example, when ancient Egyptians needed to find laborers to build their pyramids, they started to collect data about the people around them: which ones they could subjugate to help them build something and which ones they could not. The Nazis had IBM run a census to identify the populations that would be put into concentration camps and killed. To ensure that data are used in a socially responsible way, it is critical that individuals and communities (especially those who stand to lose the most when data are misused) understand data and do not feel intimidated by them.

Algorithms and Social Spaces

Organizations actively using data for decision-making are starting to use (or are thinking about using) high-tech tools to assist them. Many of these tools are driven by computational algorithms. For example, educational institutions are using predictive algorithms to identify students who are at risk of dropping out based on a set of criteria (e.g. low attendance or poor grades) and targeting them with services to support their academic success.
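To make the idea concrete, here is a minimal sketch of how such a flag might work. It is a simple rule-based stand-in, not a real predictive model (which would typically be a statistical model trained on historical records), and the `Student` fields, the cutoffs, and the sample students are all hypothetical, invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Student:
    name: str
    attendance_rate: float  # fraction of school days attended, 0.0 to 1.0
    gpa: float              # grade point average on a 4.0 scale


# Hypothetical cutoffs, chosen only for illustration.
MIN_ATTENDANCE = 0.85
MIN_GPA = 2.0


def risk_reasons(student):
    """Return the list of criteria that flag this student (empty if none)."""
    reasons = []
    if student.attendance_rate < MIN_ATTENDANCE:
        reasons.append("low attendance")
    if student.gpa < MIN_GPA:
        reasons.append("poor grades")
    return reasons


# Made-up sample data.
students = [
    Student("A", 0.95, 3.4),
    Student("B", 0.70, 2.8),
    Student("C", 0.80, 1.6),
]

# Keep only the students who trip at least one criterion.
flagged = {s.name: risk_reasons(s) for s in students if risk_reasons(s)}
print(flagged)
```

Even in a toy version like this, someone has to decide which criteria count and where the cutoffs sit, and those choices determine who gets flagged.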

As algorithms become more prevalent in the way we interface with data, it is critical to consider whether they are being deployed in an ethical way. In their presentation, Mimi Onuoha and Diana Nucera gave us a crash course on the ethics of algorithms using an activity from their zine A People’s Guide to AI. (I highly recommend that you check out their zine. It uses a popular education approach to demystify data-driven technologies, such as machine learning, and to help you think critically about their impact on our society.)

During the session, Mimi and Diana tasked participants with developing an algorithm to invite the ten most “interesting” and “fun” people at the conference to an exclusive after-party. One of the rules of the task was that we could use only publicly available data (e.g. social media) to identify our ten people. This activity gave us a glimpse into how algorithms can exclude and prioritize certain people based on the data that are fed into the system. The activity was pretty harmless, but it made us consider the impact that this same process can have in a more high-stakes space. In the criminal justice system, for example, predictive policing algorithms are used to decide where police should be sent based on where computer programs predict crime will happen. Given the racial biases baked into policing in this country, communities of color are therefore most likely to be targeted by these algorithms. As Mimi and Diana underscored:

Because we live in a society that is built on top of structural racism and inequity, these technologies don't always end up being good for everyone.
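The after-party exercise can be sketched in a few lines of code. This is not the approach any table at the session actually used; it is an illustrative toy in which "interesting" is assumed to mean "has many social media followers," and the attendee names and follower counts are made up.

```python
# Hypothetical attendees; None means no public social media profile was found.
attendees = [
    {"name": "Ana", "followers": 5200},
    {"name": "Ben", "followers": 310},
    {"name": "Chi", "followers": None},
    {"name": "Dee", "followers": 12000},
]


def pick_guests(people, n):
    """Rank people by public follower count and return the top n names."""
    # Anyone without public data never even enters the ranking.
    visible = [p for p in people if p["followers"] is not None]
    ranked = sorted(visible, key=lambda p: p["followers"], reverse=True)
    return [p["name"] for p in ranked[:n]]


invited = pick_guests(attendees, 2)
never_considered = [p["name"] for p in attendees if p["followers"] is None]

print("Invited:", invited)
print("Never considered:", never_considered)
```

Notice that the person with no public profile is not ranked low; they are invisible to the algorithm altogether. The choice of input data and the definition of "interesting" decide who is eligible before any ranking happens.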

As we build our capacity to use data, it is essential that we think about whether the data are being used and deployed in ways aligned with our missions.

WHAT DO YOU THINK?

There were so many other great presentations that got my wheels turning on what’s needed to build data use capacity. It was hard to pick just three!

Did you attend the Data on Purpose conference?

What were your favorite sessions?

What lessons, resources, or inspiration did you take away?